Summary: a demo on how to use fortran/blas libraries under the hood of your python program's vector operations to squeeze out extra speed over Numpy.

Yesterday, I posted a on how to use Apache Spark with GPUs from a notebook. To my joy, it reached the first page of Hacker News (while serving the Scala community!!!). Using Spark from one of the iPython notebooks has become a real passion of mine... and whereas yesterday I focused on Scala/JVM/GPU operations, today I want to offer a bit up to the scientific Python community. These discoveries are from studying a wonderful codebase by Radim Rehurek called Gensim... specifically the word2vec implementation.

You might be wondering why I would cover CPU based speedups following GPU based... and the truth is that sometimes lighter weight optimiations are a better fit... especially when dealing with smaller batches of vectors at a time or when GPUs simply aren't available.

Part 1: iPython-Notebook Cython Magic

Install Numpy,Scipy,GFortran,Cython, and scikit-learn packages. I HIGHLY recommend sticking to easy_install, brew (apt-get), and pip. In my experience, macports has some real trouble with these packages. Also, of course, you need to have ipython notebook installed for these examples to work, but technically it can work for normal cython too.

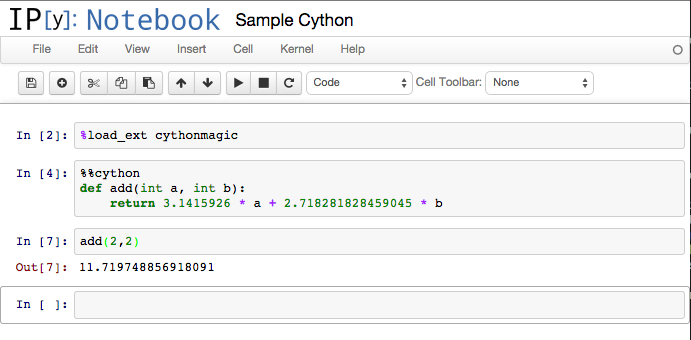

With Cython you'll get the "Cython" magic as well. The following command should work in your notebook.

Notice that you load the cython magic using "%load_ext cythonmagic" and then compile cython using "%%cython" at the top of the cell containing cython code. You can then call your cython functions (or classes... etc) from python. It's a neat system. :)

Part 2: Scipy Fortran-Blas in Cython

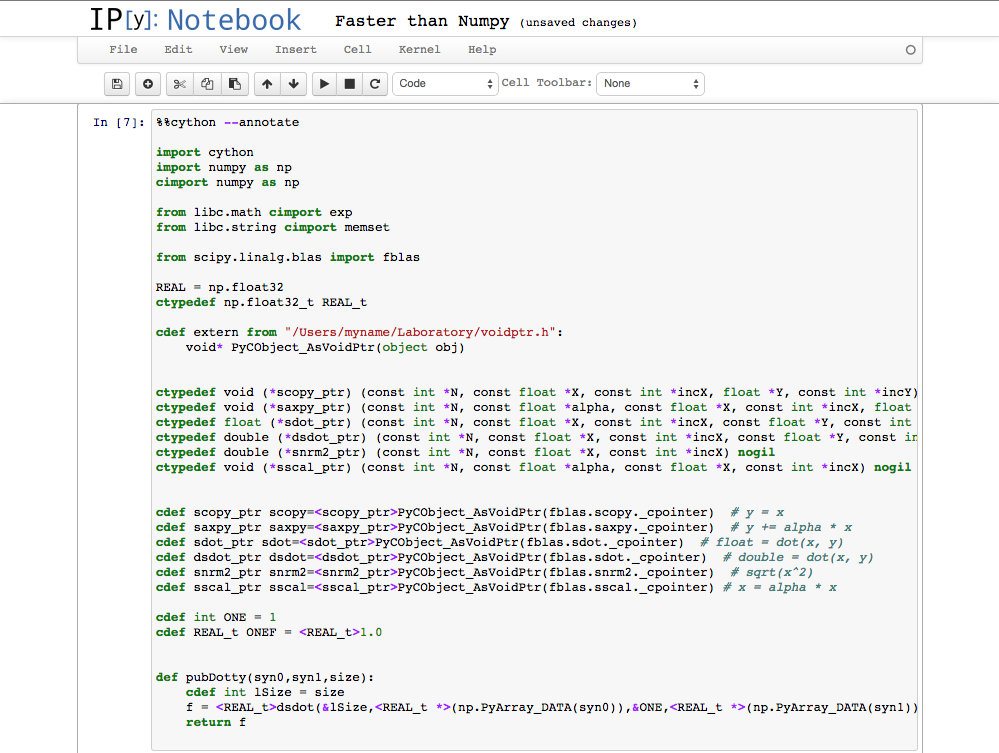

Below you'll see the core code that we need to get our superfast blas operations. After the first few imports, you'll see a "cdef extern from" import from a file called voidptr.h. This file allows us to cast a numpy array to its pointer without copying any data.... a key part of the code. The contents of that file are also below.

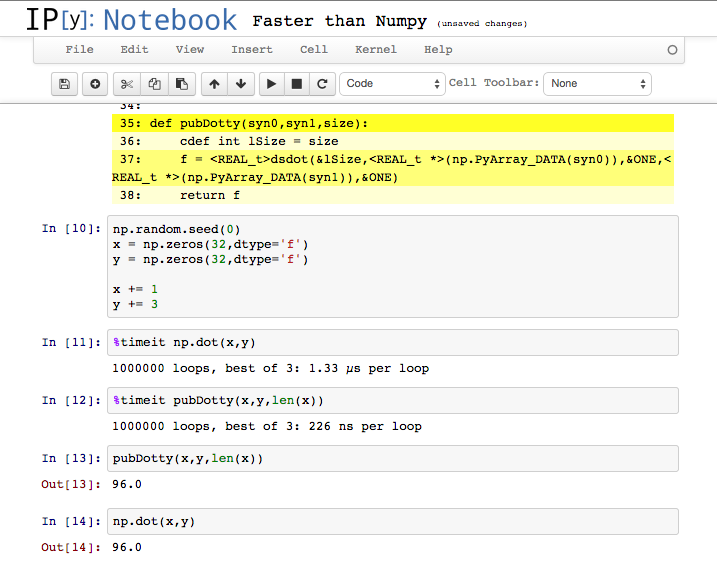

Next, you'll also see six function types and their implementations. There is a whole suite of these funky-named fortran functions in the Scipy Blas/Fortran Documentation I also write a simple dot-product function leveraging the dsdot (double dot product... as opposed to float) called pubDotty.

In this example, I create two numpy vectors of length 32. (one full of ones and another full of threes). I then benchmark and show how the cython/fortran version is 5.8x faster. It should be noted that this is still passing in a python object... this efficiency gain increases when everything stays in cython for several progressive operations.